Nobody knows for sure, but perhaps the best answer can be found in two studies done by Michael Depreli, one in 2005 and one in 2010.

What Depreli did was have two bots (computer programs) play a series of games against each other. Each bot checked the other’s play, and any position where the bots disagreed on what to do was saved and later “rolled out”.

A rollout is basically a simulation. The game is played from the same position over and over again and the results are tabulated. Whichever play wins more often in the rollout is deemed to be the better play. If you do enough trials (to eliminate random error), a rollout is considered much more reliable than the simple evaluation a bot does when it is playing. But a rollout takes a lot of time.
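
The idea can be sketched in a few lines of Python. This is only a toy, not how any of the bots actually work: the play names and win chances are made up, and a random number stands in for playing the game out with dice. It does show why the number of trials matters, since a small edge can easily be hidden by luck in a short rollout.

```python
import random

def rollout(win_chance, trials, seed=0):
    """Tabulate wins for each candidate play over `trials` simulated games.

    `win_chance` maps a play to a made-up probability of winning; it stands
    in for actually playing the position out with dice and a bot.
    """
    rng = random.Random(seed)
    wins = {}
    for play, chance in win_chance.items():
        wins[play] = sum(1 for _ in range(trials) if rng.random() < chance)
    return wins

def best_play(wins):
    """Return the play that won most often in the rollout."""
    return max(wins, key=wins.get)

# Hypothetical position: play A is truly slightly better than play B.
candidates = {"play A": 0.52, "play B": 0.50}

for trials in (36, 1296, 46656):
    wins = rollout(candidates, trials, seed=trials)
    print(trials, wins, "->", best_play(wins))
```

A real rollout also tracks gammons, backgammons, and cube ownership, so it compares equities rather than simple win counts.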

Depreli rolled out the positions and kept score to see which bot was right more often. (“Right” meaning agreeing with the rollout as to which play is best.) This article summarizes the results of Depreli’s studies.
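
Here is a rough sketch of that kind of scorekeeping. It assumes the cost of an error is the rollout equity of the best play minus the rollout equity of the play the bot chose; the positions, equities, and bot names below are invented for illustration and are not taken from Depreli's data.

```python
def error_cost(rollout_equity, chosen_play):
    """Equity given up by choosing `chosen_play` instead of the rollout's best play."""
    best = max(rollout_equity.values())
    return best - rollout_equity[chosen_play]

def total_error(positions, bot):
    """Sum a bot's error cost over every saved position."""
    return sum(error_cost(p["rollout"], p["choices"][bot]) for p in positions)

# Hypothetical data: rollout equity of each candidate play, and each bot's choice.
positions = [
    {"rollout": {"A": 0.120, "B": 0.085},
     "choices": {"bot1": "A", "bot2": "B"}},
    {"rollout": {"A": -0.310, "B": -0.250, "C": -0.400},
     "choices": {"bot1": "C", "bot2": "B"}},
]

for bot in ("bot1", "bot2"):
    print(bot, round(total_error(positions, bot), 3))
```

A bot that agrees with the rollout in a position is charged nothing for it, so a lower total means a stronger bot.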

The Bots

The following backgammon programs were included in one or both studies.

- Jellyfish, developed by Fredrik Dahl in 1995. Version 3.5 was released in 1998. Website: http://www.jellyfish-backgammon.com/

- Snowie, developed by Olivier Egger and Johannes Levermann in 1998. Version 4 was released in 2002. Website: http://www.bgsnowie.com/

- Gnu Backgammon, a cooperative effort of many volunteers developed under the terms of the GNU General Public License. Version 0.14 was released in 2004. Website: http://www.gnubg.org/

- BG-Blitz, developed by Frank Berger in 1998. Version 2.0 was released in 2004. Website: http://www.bgblitz.com/

- Extreme Gammon, developed by Xavier Dufaure de Citres in 2009. Version 2 was released in 2011. Website: http://www.extremegammon.com/

The 2005 Study

Snowie 3.2 and Gnu Backgammon 0.12 were set up to play a session of 500 games against each other. The bots played at their best settings at the time—3-ply for Snowie and 2-ply for Gnu Backgammon.

All positions from all the games were analyzed by both bots (and later by Jellyfish and later versions of the original bots). It turned out that in 626 positions one or more bots disagreed on the correct checker play or cube action.

The 626 positions were rolled out using Snowie 3.2, or sometimes Snowie 4 for trickier positions, and statistics were gathered on how well each bot performed compared with the rollout results. The following table shows the total cost of the errors by each bot.

Bot Comparison — 2005 Study

| Bot, Version | Checker Errors | Missed Double | Wrong Double | Double/No Double Total | Wrong Take | Wrong Pass | Take/Pass Total | Cube Errors Total | Total Errors |
|---|---|---|---|---|---|---|---|---|---|
| Gnu BG 0.14 | 8.175 | 0.728 | 0.323 | 1.051 | 1.392 | 0.391 | 1.783 | 2.834 | 11.009 |
| Gnu BG 0.13 | 8.949 | 0.728 | 0.342 | 1.070 | 1.631 | 0.255 | 1.886 | 2.956 | 11.905 |
| Snowie 4 | 8.744 | 1.030 | 0.862 | 1.892 | 1.530 | 0.365 | 1.895 | 3.787 | 12.531 |
| Gnu BG 0.12 | 10.571 | 0.964 | 0.960 | 1.924 | 1.344 | 0.691 | 2.035 | 3.959 | 14.530 |
| Snowie 3 | 14.292 | 2.092 | 0.404 | 2.496 | 2.613 | 0.855 | 3.468 | 5.964 | 20.256 |
| Jellyfish 3.5 | 20.109 | 2.602 | 1.074 | 3.676 | 4.291 | 0.598 | 4.889 | 8.565 | 28.674 |

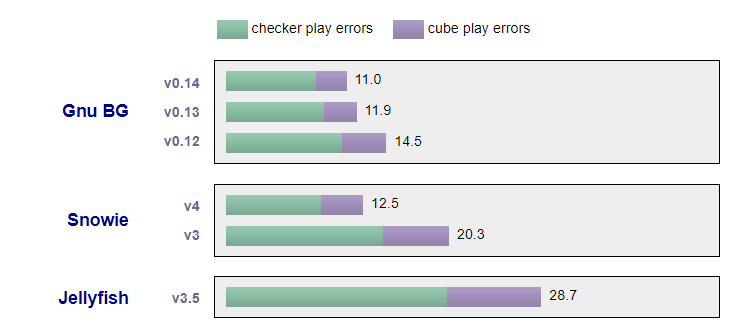

Here is a graphical representation of the data

You can see from the charts that the latest versions of Gnu Backgammon and Snowie are almost tied in strength. Jellyfish trails the other two, but it is worth remembering that even Jellyfish plays a very strong game (comparable to the best human players in the world).
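
For readers who want to see how the columns of the 2005 table fit together, here is a small check using the numbers as published. The total for each bot is its checker errors plus its double/no double errors plus its take/pass errors. The variable and function names are just for this sketch.

```python
# (checker, missed double, wrong double, wrong take, wrong pass, reported total)
rows_2005 = {
    "Gnu BG 0.14":   (8.175, 0.728, 0.323, 1.392, 0.391, 11.009),
    "Gnu BG 0.13":   (8.949, 0.728, 0.342, 1.631, 0.255, 11.905),
    "Snowie 4":      (8.744, 1.030, 0.862, 1.530, 0.365, 12.531),
    "Gnu BG 0.12":   (10.571, 0.964, 0.960, 1.344, 0.691, 14.530),
    "Snowie 3":      (14.292, 2.092, 0.404, 2.613, 0.855, 20.256),
    "Jellyfish 3.5": (20.109, 2.602, 1.074, 4.291, 0.598, 28.674),
}

def recomputed_total(row):
    """Rebuild the Total Errors column from the individual error columns."""
    checker, missed_double, wrong_double, wrong_take, wrong_pass, _ = row
    double_errors = missed_double + wrong_double   # Double/No Double total
    take_errors = wrong_take + wrong_pass          # Take/Pass total
    return checker + double_errors + take_errors

for bot, row in rows_2005.items():
    diff = abs(recomputed_total(row) - row[-1])
    print(f"{bot:14s} recomputed {recomputed_total(row):7.3f}  "
          f"reported {row[-1]:7.3f}  diff {diff:.3f}")
```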

The 2010 Study

This time Snowie 4 and Gnu Backgammon 0.14 were pitted against each other in a 500-game series. Four bots (BG-Blitz, Extreme Gammon, Gnu Backgammon, and Snowie) analyzed the games. Any position where one bot disagreed with the others was rolled out using Gnu Backgammon—a total of 4903 checker plays and cube actions.

The following table shows the total cost of errors by each bot. Note that, even though both the 2005 and 2010 studies analyzed 500 games, more positions are included in the 2010 table. There are a couple of reasons for this:

- More bots (and different settings) were used in the 2010 study, so there was more chance for disagreement.

- The 2005 study used only positions where a bot made an error >0.029, whereas the 2010 study used all positions where a bot made any error.

Bot Comparison — 2010 Study

| Bot, Setting | Look-ahead | Checker Errors | Missed Double | Wrong Double | Double/No Double Total | Wrong Take | Wrong Pass | Take/Pass Total | Cube Errors Total | Total Errors |
|---|---|---|---|---|---|---|---|---|---|---|
| eXtreme, XGR+ | | 13.397 | 1.088 | 0.658 | 1.746 | 0.970 | 0.241 | 1.211 | 2.957 | 16.354 |
| eXtreme, XGR | | 22.269 | 1.661 | 0.783 | 2.444 | 2.173 | 0.264 | 2.437 | 4.881 | 27.150 |
| eXtreme, 5-ply | 4 | 17.169 | 1.507 | 0.789 | 2.296 | 2.859 | 0.450 | 3.309 | 5.605 | 22.774 |
| Gnu BG, 4-ply | 4 | 21.599 | 2.663 | 0.644 | 3.307 | 4.061 | 0.127 | 4.188 | 7.495 | 29.094 |
| eXtreme, 4-ply | 3 | 22.967 | 0.426 | 1.647 | 2.073 | 0.818 | 0.555 | 1.373 | 3.446 | 26.413 |
| Gnu BG, 3-ply | 3 | 29.313 | 0.903 | 10.276 | 11.179 | 0.775 | 5.880 | 6.655 | 17.834 | 47.147 |
| eXtreme, 3-ply | 2 | 27.814 | 1.831 | 1.528 | 3.359 | 3.996 | 0.520 | 4.516 | 7.875 | 35.689 |
| Gnu BG, 2-ply | 2 | 33.247 | 2.763 | 1.670 | 4.433 | 4.261 | 0.476 | 4.737 | 9.170 | 42.417 |
| Snowie 4, 3-ply | 2 | 37.424 | 1.922 | 1.139 | 3.061 | 3.651 | 0.867 | 4.518 | 7.579 | 45.003 |
| BG Blitz, 3-ply | 2 | 41.286 | 1.692 | 17.263 | 18.955 | 4.168 | 2.159 | 6.327 | 25.282 | 66.568 |

Source: http://www.bgonline.org/forums/webbbs_config.pl?noframes;read=64993

Rollout parameters: Gnubg 2-Ply World Class, 1296 trials or 2.33 JSD, minimum 720 trials. Opening rolls and replies rollout data from Stick’s Gnubg rollouts at www.bgonline.org as of April 2010.

The Rollout Team: Darin Campo, Miran Tuhtan, Misja Alma, Mislav Radica, Neil Kazaross, Robby, and Stick and those who helped him with the opening moves and replies rollouts.

Notes: XGR does a 6-ply truncated rollout of 3600 games using one ply of lookahead for the first two cube decisions and no lookahead for the other decisions. XGR+ does a 7-ply truncated rollout of 360 games with variance reduction using one ply of lookahead for cube decisions and the first two checker plays and no lookahead for the other plays.
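
The stopping rule in the rollout parameters above ("1296 trials or 2.33 JSD, minimum 720 trials") might be read as follows. This sketch assumes that JSD means joint standard deviation, taken here as the square root of the sum of the squared standard errors of the two plays being compared. The function name and arguments are hypothetical and are not Gnu Backgammon's actual interface.

```python
import math

def should_stop(trials, equity_gap, se_best, se_second,
                min_trials=720, max_trials=1296, jsd_limit=2.33):
    """Decide whether a rollout comparing two plays can stop.

    equity_gap : equity of the leading play minus equity of the runner-up
    se_best, se_second : standard errors of the two equity estimates
    """
    if trials >= max_trials:
        return True       # hard cap on the number of trials
    if trials < min_trials:
        return False      # never stop before the minimum
    # Assumed definition of the joint standard deviation of the two estimates.
    jsd = math.sqrt(se_best ** 2 + se_second ** 2)
    return jsd > 0 and equity_gap / jsd >= jsd_limit

print(should_stop(500, 0.050, 0.010, 0.010))    # below the minimum
print(should_stop(720, 0.050, 0.010, 0.010))    # gap is large relative to the noise
print(should_stop(1000, 0.010, 0.010, 0.010))   # gap is still within the noise
print(should_stop(1296, 0.000, 0.010, 0.010))   # reached the cap
```

Under this reading, clear-cut positions stop early at 720 trials and only the close ones run the full 1296.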

Maik Stiebler took Depreli’s data and calculated the error rate for each bot. This assumes the rollout data we are comparing to is correct. (Rollouts are not 100% correct. There is some systematic error, because the bot makes errors in play, and some random error, because of luck of the dice.) It’s not clear whether more accurate rollout data would increase or decrease these implied error rates.

| Bot, Setting | Total Error | Error Rate |
|---|---|---|
| eXtreme, XGR+ | 16.354 | 0.425 |
| eXtreme, XGR | 27.150 | 0.705 |
| eXtreme, 5-ply | 22.774 | 0.591 |
| Gnu BG, 4-ply | 29.094 | 0.755 |
| eXtreme, 4-ply | 26.413 | 0.686 |
| Gnu BG, 3-ply | 47.147 | 1.224 |
| eXtreme, 3-ply | 35.689 | 0.927 |
| Gnu BG, 2-ply | 42.417 | 1.101 |
| Snowie 4, 3-ply | 45.003 | 1.168 |
| BG Blitz, 3-ply | 66.568 | 1.728 |
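
The article does not say how the error rate was derived from the total error, but the table itself gives a strong hint: dividing total error by error rate gives nearly the same number for every bot. The short check below uses only the figures from the table. Reading that constant as "thousands of decisions in the 500 games" (with the error rate in thousandths of a point per decision) is a guess, not something stated in the source.

```python
# (total error, error rate) as published in the table above
error_table = {
    "eXtreme, XGR+":   (16.354, 0.425),
    "eXtreme, XGR":    (27.150, 0.705),
    "eXtreme, 5-ply":  (22.774, 0.591),
    "Gnu BG, 4-ply":   (29.094, 0.755),
    "eXtreme, 4-ply":  (26.413, 0.686),
    "Gnu BG, 3-ply":   (47.147, 1.224),
    "eXtreme, 3-ply":  (35.689, 0.927),
    "Gnu BG, 2-ply":   (42.417, 1.101),
    "Snowie 4, 3-ply": (45.003, 1.168),
    "BG Blitz, 3-ply": (66.568, 1.728),
}

# Implied divisor for each row: total error / error rate.
ratios = {bot: total / rate for bot, (total, rate) in error_table.items()}

for bot, ratio in ratios.items():
    print(f"{bot:16s} {ratio:6.2f}")

print("spread:", round(max(ratios.values()) - min(ratios.values()), 3))
```

The small spread between rows is what you would expect from rounding the error rates to three decimal places.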

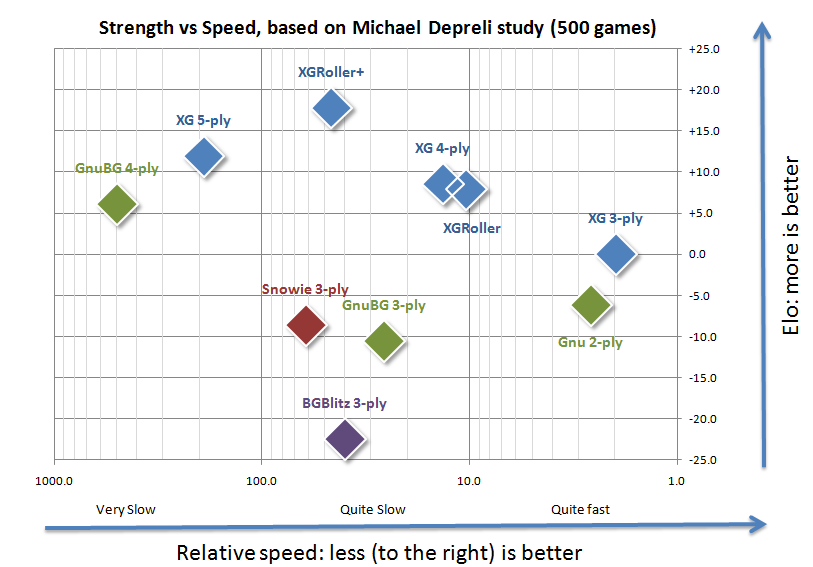

Another important consideration for users of backgammon software is the speed of the bot at evaluating plays, because this affects the speed of rollouts. For example, Extreme Gammon and Gnu Backgammon run significantly faster than Snowie and BG-Blitz, so much faster that they can look ahead an extra ply without using more time. Xavier Dufaure de Citres (developer of Extreme Gammon) prepared a chart showing how the different bots and different settings relate to each other for accuracy and speed.

Note: Speed test was done by Xavier on a core i7-920 processor. A different processor might produce different relative speeds.

2012 Update

Extreme Gammon Version 2 was released in 2011. Here is an updated table which includes XG v2 and was done with much stronger rollout settings: XG v2, 3-ply checker, XG-roller cube, minimum 1296 trials, no maximum until error is less than 0.005.

Many thanks to the rollateers: Frank Berger, Xavier Dufaure de Citres, Neil Kazaross, Michael Petch, and Miran Tuhtan.

Bot Comparison — 2012 Update

| Bot, Setting | Checker Errors | Missed Double | Wrong Double | Double/No Double Total | Wrong Take | Wrong Pass | Take/Pass Total | Cube Errors Total | Total Errors |
|---|---|---|---|---|---|---|---|---|---|
| XG v2, XGR++ | 3.668 | 0.115 | 0.223 | 0.338 | 0.199 | 0.028 | 0.227 | 0.564 | 4.232 |
| XG v2, XGR+ | 5.458 | 0.479 | 0.261 | 0.740 | 0.391 | 0.020 | 0.411 | 1.151 | 6.608 |
| XG v2, 5-ply | 7.082 | 0.968 | 0.392 | 1.360 | 0.618 | 0.104 | 0.722 | 2.082 | 9.163 |
| XG v2, 4-ply | 9.787 | 0.356 | 1.159 | 1.515 | 0.566 | 0.160 | 0.726 | 2.241 | 12.028 |
| XG v1, XGR+ | 9.226 | 1.696 | 0.665 | 2.361 | 1.686 | 0.030 | 1.716 | 4.077 | 13.303 |
| XG v2, XGR | 12.843 | 0.495 | 0.989 | 1.484 | 0.401 | 0.160 | 0.561 | 2.044 | 14.887 |
| XG v2, 3-ply | 13.199 | 1.214 | 1.003 | 2.217 | 0.704 | 0.196 | 0.900 | 3.116 | 16.316 |
| XG v1, 5-ply | 12.853 | 2.185 | 0.932 | 3.117 | 3.151 | 0.367 | 3.518 | 6.635 | 19.488 |
| XG v1, 4-ply | 17.917 | 0.656 | 1.740 | 2.396 | 1.046 | 0.401 | 1.447 | 3.843 | 21.760 |
| XG v1, XGR | 16.271 | 2.417 | 0.915 | 3.332 | 2.558 | 0.070 | 2.628 | 5.960 | 22.232 |
| XG v2, 3-ply red | 19.689 | 1.424 | 1.160 | 2.584 | 0.704 | 0.196 | 0.900 | 3.484 | 23.173 |
| XG v1, 3-ply | 22.202 | 2.217 | 1.634 | 3.851 | 4.400 | 0.776 | 5.176 | 9.027 | 31.228 |

Source: http://www.bgonline.org/forums/webbbs_config.pl?noframes;read=114355

Comparison of Rollouts

How do the GnuBG rollouts that were used as “ground truth” for the original table stack up against the new rollouts? Xavier Dufaure de Citres supplies the following data:

| | Checker Errors | Missed Double | Wrong Double | Double/No Double Total | Wrong Take | Wrong Pass | Take/Pass Total | Cube Errors Total | Total Errors |
|---|---|---|---|---|---|---|---|---|---|
| Gnu BG Rollout | 5.488 | 0.333 | 0.312 | 0.645 | 0.171 | 0.035 | 0.206 | 0.851 | 6.339 |

Some portion of the reported difference will be from errors in the new rollouts, but presumably, a much larger portion of the difference is from errors in the original GnuBG Rollouts.

Summary and Comments

- The programs rank from strongest to weakest roughly as follows: Extreme Gammon, Gnu Backgammon, Snowie, BG-Blitz, and Jellyfish.

- A truncated rollout (such as XGR+) can produce a better speed/accuracy tradeoff than a deep-ply full evaluation. This suggests a useful feature for future bots: a super rollout, where each play is determined by a mini rollout.

- In Gnu Backgammon, you should not use 3-ply evaluation for cube decisions. Always use even ply, either 2-ply or 4-ply.

- BG-Blitz does almost as well as Snowie at checker play. It just needs to improve its cube play.

- These studies focus on positions that come up in bot-versus-bot play. Human-versus-human play might yield different types of positions. For example, some people say that Snowie is stronger at back games. If this is true, and if humans play more back games than computers do, a study of human-play positions might be more favorable to Snowie.
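
The point about Gnu Backgammon's 3-ply cube play can be seen directly in the 2010 numbers. The sketch below adds the checker errors from one setting to the cube errors from another. No such mixed setting was part of the study, so the result is only an estimate; it assumes checker and cube errors can simply be added because every setting was scored on the same saved positions.

```python
# Checker and cube error totals for Gnu BG, from the 2010 table.
gnubg_2010 = {
    "2-ply": {"checker": 33.247, "cube": 9.170},
    "3-ply": {"checker": 29.313, "cube": 17.834},
    "4-ply": {"checker": 21.599, "cube": 7.495},
}

def mixed_total(checker_setting, cube_setting):
    """Estimated total error when using different plies for checker and cube play."""
    return (gnubg_2010[checker_setting]["checker"]
            + gnubg_2010[cube_setting]["cube"])

print("3-ply for both:         ", round(mixed_total("3-ply", "3-ply"), 3))
print("2-ply for both:         ", round(mixed_total("2-ply", "2-ply"), 3))
print("3-ply checker, 2-ply cube:", round(mixed_total("3-ply", "2-ply"), 3))
```

For comparison, the 2010 table lists eXtreme 3-ply at a total error of 35.689.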

Daniel Murphy: Interesting that Gnu BG 4-ply (ER 0.755) ranks nearly as high as XGR (ER 0.705) (but is much, much slower) and, although both Gnu BG 2-ply and Gnu BG 3-ply performed much worse than that, if a row were added for Gnu BG using 2-ply for cube decisions and 3-ply for checker play, that combination would rank nearly as high as eXtreme 3-ply. (No idea how that combo would fare in the speed vs. strength table.)

Christian Munk-Christensen: What was the final score (which bot won and by how much)?

Tom Keith: I don’t know if Michael ever said—and perhaps it is better that way. These bots are so close that the result will be completely determined by luck anyway. Even with variance reduction, 500 games is too few to get a meaningful result. That’s why Michael concentrated on positions where the bots disagreed. It’s not exactly the same as a straight bot-versus-bot contest, but it gives a good indication of which bot would win in a straight contest, if carried on long enough.

Nack Ballard: Does that mean that Gnu 2-ply is reported as 23 times faster than Snowie and only 1.4 times as slow as XG (3-ply)? That isn’t remotely close to the rough estimates I’ve heard of Gnu/Snowie = 2.5 and XG/Gnu = 3.5. What am I misunderstanding?

Xavier Dufaure de Citres: All speeds are based on the analysis of two sessions (one money session and a 25-point match). One exception is BG-Blitz, where the speed was determined based on rollout speed compared to XG’s. It is important to point out that speed depends greatly on the processor used. Snowie is single-threaded, which means it can only use one core of your processor, while all the other programs will use all the cores/threads available. I used my core i7 920 for that analysis, which has four cores and eight threads. Now, the speed of Analyze is one thing and the speed of Rollout is another. I think the numbers you were referring to are about rollouts. For rollouts, yes, XG’s edge seems to be greater than on a plain Analyze of a match.

Article By Tom Keith